Table of Contents

- – Strapi CMS: A Game-Changer for Scalable Digita...

- – Evaluating Performance: Key Measurements for P...

- – Optimizing the Art of Information Strategy in...

- – The Investment Model of Bespoke Online Projec...

- – Leveraging Modern Development for Performance

- – Modern Content Management: A Breakthrough fo...

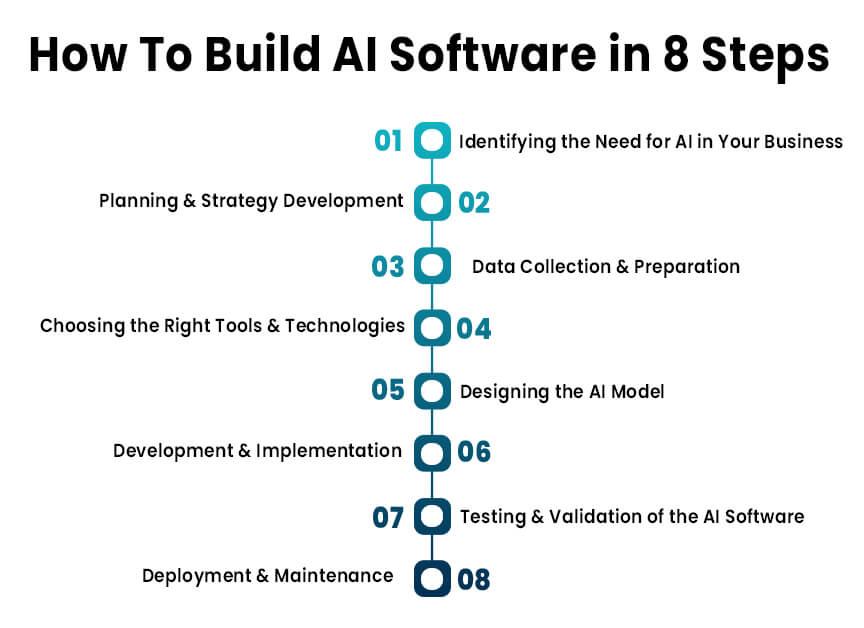

Building an AI system is a marathon that demands research, analysis, and experimentation to determine the role of AI in your business and to ensure secure, ethical, and ROI-driven solution deployment. To help you out, the Xenoss team created a simple framework explaining how to build an AI system. It covers the key considerations, challenges, and stages of the AI project lifecycle.

Your first goal is to identify the role of AI in your operations. The easiest way to approach this is by working backward from your objective(s): What do you want to achieve with AI implementation?

Strapi CMS: A Game-Changer for Scalable Digital Platforms

Choose use cases where you have already seen a convincing demonstration of the technology's potential. In the finance sector, AI has proven its value for fraud detection. Machine learning and deep learning models outperform traditional rules-based fraud detection systems by offering a lower rate of false positives and showing better results in recognizing new types of fraud.

Researchers agree that synthetic datasets can improve privacy and representation in AI, especially in sensitive industries like healthcare or finance. Gartner predicts that by 2024, as much as 60% of data for AI will be synthetic. All the acquired training data will then have to be pre-cleansed and cataloged. Use a consistent taxonomy to establish clear data lineage, and then monitor how different users and systems use the supplied data.

Evaluating Performance: Key Measurements for Platform Implementations Investments

Additionally, you'll need to split the available data into training, validation, and test datasets to benchmark the developed model. Mature AI development teams complete most of the data management processes with data pipelines: an automated sequence of steps for data ingestion, processing, storage, and subsequent access by AI models.

Example of data pipeline architecture for data warehousing

With a robust data pipeline architecture, companies can process large volumes of data records in milliseconds, in near real-time.

Amazon's Supply Chain Finance Analytics team, in turn, improved its data engineering workloads with Dremio. With the new setup, the company built new extract, transform, load (ETL) workloads 90% faster, while query speed increased by 10X. This, in turn, made data more accessible for many concurrent users and machine learning projects.
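The three-way split mentioned above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the function name `split_dataset`, the 70/15/15 proportions, and the fixed seed are all assumptions chosen for the example.

```python
import random

def split_dataset(records, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle records and split them into train, validation, and test lists.

    The fractions and the seed are illustrative defaults (assumptions).
    Whatever is left after the train and validation shares becomes the test set.
    """
    if not 0 < train_frac < 1 or not 0 <= val_frac < 1 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave a non-empty share for the test set")
    shuffled = list(records)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    validation = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, validation, test

train, validation, test = split_dataset(range(100))
print(len(train), len(validation), len(test))
```

Shuffling before slicing avoids accidental ordering effects, for instance records sorted by date. For time-dependent data a chronological split may be more appropriate than a random one.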

Optimizing the Art of Information Strategy in User Conversion Websites

The training process is complicated, too, and prone to issues like sample efficiency, stability of training, and catastrophic interference, among others. Successful commercial applications are still few and mostly come from deep tech companies. Foundation models are the backbone of generative AI. By using a pre-trained, fine-tuned model, you can quickly train a new gen AI algorithm.

Unlike traditional ML frameworks for natural language processing, foundation models require smaller labeled datasets because they already have knowledge embedded during pre-training. That said, foundation models can still produce inaccurate and inconsistent results, especially when applied to domains or tasks that differ from their training data. Training a foundation model from scratch also requires massive computational resources.

The Investment Model of Bespoke Online Projects against Generic Solutions

Effectively, the model does not produce the desired results in the target environment due to differences in parameters or configurations. For example, if the model dynamically optimizes prices based on the total number of orders and conversion rates, but these parameters change significantly over time, it will no longer give accurate suggestions.

Rather, most maintain a database of model versions and perform iterative model training to progressively improve the quality of the final product. ... and only 11% are successfully deployed to production.

After that, you benchmark the iterations to determine the model version with the highest accuracy. Feature selection is another important technique. A model with too few features struggles to adapt to variations in the data, while too many features can lead to overfitting and worse generalization. Highly correlated features can also cause overfitting and undermine explainability techniques.
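One common way to notice this kind of shift is to compare the distribution of an input feature at training time with its distribution in production. The sketch below uses the Population Stability Index (PSI) as an illustrative choice. The source does not name a specific method, and the function name, the bin count, and the 0.2 alert threshold (a widely used rule of thumb) are assumptions.

```python
import math

def population_stability_index(baseline, current, bins=10):
    """Compare two samples of one feature; larger values mean a bigger shift."""
    lo, hi = min(baseline), max(baseline)
    if hi == lo:
        raise ValueError("baseline must contain at least two distinct values")
    width = (hi - lo) / bins

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids taking the logarithm of zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    base_shares, cur_shares = shares(baseline), shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base_shares, cur_shares))

# Hypothetical conversion rates seen during training vs. in production.
training_rates = [i / 100 for i in range(100)]
production_rates = [0.5 + i / 200 for i in range(100)]

psi = population_stability_index(training_rates, production_rates)
if psi > 0.2:  # assumed alert threshold
    print("Input distribution has drifted; consider retraining the model.")
```

A check like this only flags that the inputs have moved. Whether the model's suggestions have actually degraded still has to be confirmed against fresh labeled outcomes.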
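As a rough illustration of the point about correlated features, the following sketch flags feature pairs whose Pearson correlation exceeds a threshold, so that one of each pair can be reviewed for removal. The feature names, the sample values, and the 0.9 cutoff are hypothetical.

```python
import math
from itertools import combinations

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no correlation signal
    return cov / math.sqrt(var_x * var_y)

def highly_correlated_pairs(features, threshold=0.9):
    """Return (name_a, name_b, r) for every pair with |r| >= threshold."""
    pairs = []
    for (name_a, col_a), (name_b, col_b) in combinations(features.items(), 2):
        r = pearson(col_a, col_b)
        if abs(r) >= threshold:
            pairs.append((name_a, name_b, r))
    return pairs

# Hypothetical feature columns for a pricing model.
features = {
    "orders": [10, 20, 30, 40, 50],
    "revenue": [105, 198, 310, 395, 502],
    "weekday": [3, 1, 4, 1, 5],
}

for name_a, name_b, r in highly_correlated_pairs(features):
    print(f"{name_a} and {name_b} are strongly correlated (r={r:.2f})")
```

Here `orders` and `revenue` move almost in lockstep, so keeping both adds little information. Pearson correlation only captures linear relationships, so this is a first-pass filter rather than a complete feature selection procedure.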

Leveraging Modern Development for Performance

But it's also the most error-prone one. Just 32% of ML projects, including refreshing models for existing deployments, typically reach deployment.

Deployment success across different machine learning projects

The reasons for failed deployments vary from a lack of executive support for the project due to unclear ROI to technical difficulties with ensuring stable model operations under increased loads.

The team needed to make sure that the ML model was highly available and served highly personalized recommendations from the titles available on the user's device, and did so for the platform's very large user base. To ensure high performance, the team decided to run model scoring offline and then serve the results once the user logs into their device.
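The offline-scoring pattern described above can be sketched as two separate pieces: a batch job that precomputes recommendations, and a cheap lookup at login. This is a simplified illustration and not the team's actual implementation. The `score` function is a hypothetical stand-in for a trained model, and the data shapes and names are assumptions.

```python
def score(user, title):
    # Hypothetical stand-in for a trained model: counts shared genres.
    return len(user["liked_genres"] & title["genres"])

def precompute_recommendations(users, catalog, top_n=2):
    """Batch job: rank the titles available on each user's device ahead of time."""
    store = {}
    for user_id, user in users.items():
        available = [t for t in catalog if user["device"] in t["devices"]]
        ranked = sorted(available, key=lambda t: score(user, t), reverse=True)
        store[user_id] = [t["name"] for t in ranked[:top_n]]
    return store

def recommendations_on_login(store, user_id, fallback=()):
    """Serving path: a dictionary lookup, no model call at request time."""
    return store.get(user_id, list(fallback))

# Hypothetical catalog and user data.
catalog = [
    {"name": "A", "genres": {"drama"}, "devices": {"tv", "phone"}},
    {"name": "B", "genres": {"comedy", "drama"}, "devices": {"tv"}},
    {"name": "C", "genres": {"sci-fi"}, "devices": {"phone"}},
]
users = {"u1": {"device": "phone", "liked_genres": {"sci-fi"}}}

store = precompute_recommendations(users, catalog)
print(recommendations_on_login(store, "u1"))
```

The trade-off is freshness: results reflect the state of the data when the batch job last ran, in exchange for predictable latency at login.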

Modern Content Management: A Breakthrough for Modern Web Projects

It also helped the company optimize cloud infrastructure costs. Ultimately, successful AI model deployments come down to having effective processes. Just as the DevOps principles of continuous integration (CI) and continuous delivery (CD) improve the deployment of regular software, MLOps increases the speed, efficiency, and predictability of AI model releases. MLOps is a set of practices and tools that AI development teams use to create a sequential, automated pipeline for releasing new AI solutions.
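To make the idea of a sequential, automated release pipeline more concrete, here is a toy sketch in which each step runs in order and a quality gate can stop the release. Every step name, the `min_accuracy` gate, and the placeholder "model" are assumptions for illustration. Real MLOps tooling involves far more, and the toy evaluation reuses the training rows only to keep the example short.

```python
def validate_data(ctx):
    ctx["halt"] = len(ctx["rows"]) == 0  # stop early on empty input
    return ctx

def train(ctx):
    # Placeholder "model": always predicts the most frequent label.
    labels = [label for _, label in ctx["rows"]]
    ctx["model"] = max(set(labels), key=labels.count)
    return ctx

def evaluate(ctx):
    # Simplification: scores on the training rows instead of a held-out set.
    labels = [label for _, label in ctx["rows"]]
    ctx["accuracy"] = labels.count(ctx["model"]) / len(labels)
    ctx["halt"] = ctx["accuracy"] < ctx["min_accuracy"]  # quality gate
    return ctx

def deploy(ctx):
    ctx["deployed"] = True
    return ctx

def run_pipeline(steps, ctx):
    """Run steps in order; stop as soon as a step sets ctx['halt']."""
    ctx["log"] = []
    for name, step in steps:
        ctx = step(ctx)
        ctx["log"].append(name)
        if ctx.get("halt"):
            break
    return ctx

STEPS = [("validate_data", validate_data), ("train", train),
         ("evaluate", evaluate), ("deploy", deploy)]

rows = [(1, "a"), (2, "a"), (3, "a"), (4, "b")]
result = run_pipeline(STEPS, {"rows": rows, "min_accuracy": 0.7})
print(result["log"], result.get("deployed", False))
```

The gate in `evaluate` is the part that mirrors CI/CD practice: a release that fails an automated check never reaches the deploy step.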
